How I Use LLMs: Practical AI Applications for Lawyers & Practice Management

This blog post is for informational purposes only and does not constitute legal advice. For specific legal concerns, please consult a qualified attorney.

As a solo lawyer, I’ve found LLMs to be a huge support in setting up and managing my firm’s business and internal operations.

Through hundreds of hours of use, research, and ongoing experimentation, I've learned some things that have improved my efficiency and allowed me to spend more time on high-value, strategic work.

Below I’ll share my approach to working with LLMs on behalf of my businesses, including a detailed use case example and some anecdotal observations about their relative strengths and weaknesses.

Disclaimer: This post is intended as food for thought for legal professionals looking for ways to use LLMs more thoughtfully and effectively. These approaches and examples may or may not be relevant to your use case, could change if the technology does, and don’t necessarily reflect how I would use LLMs for specific client matters (which would always be discussed in advance).

Understanding the Robot

Something clicked for me when I stopped thinking about LLMs like search engines and started thinking about them like super bright, overly eager new hires who have studied everything on the internet but have terrible short-term memory.

Asking myself “what would a new hire need for this task?” gives me an intuitive sense of the kinds of questions I can ask LLMs and the information I need to give them to do the job well. This has fundamentally changed how I interact with them.

As with a new hire, assigning one kind of task at a time, giving clear instructions, and providing only information relevant to the task has worked way better for me than unloading my to-do list and inbox in one prompt.

Building Trust

For me, one of the biggest initial challenges with LLMs was getting a general sense of how much I could trust them with different kinds of tasks in different contexts.

Unlike with math or coding, LLMs are notoriously difficult to evaluate on tasks that have no clear, generally accepted “right” answer (so-called “non-verifiable” tasks). There are many reasons for this.

For practical purposes, the most effective way I’ve found to gauge this at the outset (an approach I didn’t invent) is to give the model questions or tasks where I already know what the expected output should look like. For example, recording my stream-of-consciousness thoughts and then seeing an LLM summarize them clearly and accurately went a long way toward building my trust.

Asking Questions

I’ve also discovered how important it is to learn how to ask. For somewhat mysterious reasons that are still being actively studied, LLM responses can be drastically different depending on how the question is phrased.

Early on, I spent an inordinate amount of time reading research paper abstracts (and sometimes entire papers) and creating prompt templates based on my interpretation of the research. I don’t think this is necessary for effective prompting.

LLMs are way, way better (and faster) at prompting than we are. I almost always record myself verbally describing the task and background, then ask an LLM to create a prompt based on the transcript.

Even if the first shot isn’t perfect, it’s so much faster to edit an existing prompt that I almost never do anything meaningful or complex without recording my thoughts and asking a reasoning LLM to craft a prompt I can use for the task.
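For readers who like to script this kind of workflow, here is a minimal Python sketch of the “transcript to prompt” request. It only assembles the text you would paste into a reasoning model; the function name `build_meta_prompt` and the instruction wording are hypothetical illustrations, not a format any LLM provider requires.

```python
def build_meta_prompt(transcript: str, task_summary: str) -> str:
    """Wrap a dictated transcript in a request asking an LLM to write a prompt.

    Hypothetical helper: the instruction wording below is illustrative only.
    """
    transcript = transcript.strip()
    if not transcript:
        raise ValueError("transcript is empty")
    return (
        "You are helping me write a prompt for another LLM.\n"
        f"Task in one line: {task_summary.strip()}\n\n"
        "Below is an unedited transcript of me describing the task and background.\n"
        "Turn it into a clear, self-contained prompt that states the goal, the relevant\n"
        "context, any constraints, and the format I want back. Ask me about anything\n"
        "that is ambiguous instead of guessing.\n\n"
        "--- TRANSCRIPT ---\n"
        f"{transcript}\n"
        "--- END TRANSCRIPT ---"
    )


if __name__ == "__main__":
    # Placeholder transcript; in practice this is the text of a voice recording.
    print(build_meta_prompt(
        "So I need to figure out what insurance a new solo firm should carry...",
        "Research insurance coverage for a new solo law practice",
    ))
```

The generated prompt is a first draft to edit, not a finished product; the point is that editing is faster than writing from scratch.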

Foundation Data

Maintaining a structured repository of custom instructions, LLM-polished prompts, and concise contextual data points (what I call foundation data) has made working with LLMs much more consistent, fluid, and relevant. In my case, foundation data includes key facts about my firm that I am comfortable sharing publicly, voice and style guidelines, ethical rules, and templates I use frequently.

I can add these structured data points to LLM context in many different ways (prompts, custom instructions, document attachments) and they work with any LLM.
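One way to keep foundation data portable is to store it as labeled sections and render it to plain text on demand, so the same source can be pasted into a prompt, saved as custom instructions, or attached as a document. The sketch below assumes a hypothetical `FoundationData` structure with the four categories named above; the entries in the usage line are placeholders, not real firm facts.

```python
from dataclasses import dataclass, field


@dataclass
class FoundationData:
    """Hypothetical container for reusable, non-confidential context."""
    firm_facts: list[str] = field(default_factory=list)
    voice_and_style: list[str] = field(default_factory=list)
    ethical_rules: list[str] = field(default_factory=list)
    templates: dict[str, str] = field(default_factory=dict)

    def render(self, include_templates: bool = False) -> str:
        """Render sections as plain text for a prompt, custom instructions, or attachment."""
        sections = [
            ("Firm facts", self.firm_facts),
            ("Voice and style", self.voice_and_style),
            ("Ethical rules", self.ethical_rules),
        ]
        parts = []
        for title, items in sections:
            if items:  # skip empty sections to keep the context concise
                bullets = "\n".join(f"- {item}" for item in items)
                parts.append(f"## {title}\n{bullets}")
        if include_templates:  # templates can be long, so include them only when needed
            for name, body in self.templates.items():
                parts.append(f"## Template: {name}\n{body}")
        return "\n\n".join(parts)


if __name__ == "__main__":
    data = FoundationData(
        firm_facts=["<practice area>", "<jurisdiction>"],  # placeholders
        voice_and_style=["Plain English", "Short paragraphs"],
    )
    print(data.render())
```

Leaving templates out by default reflects the concision goal: attach a template only to the task that actually uses it.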

At this point, I can usually “onboard” a new model (bring it up to speed on my business + set up customized workflows) in about ten minutes.

Managing the Context Window

It's also important to manage the “context window”, which is basically how much information the LLM can handle at once.

In my experience, and despite what the marketing says, current LLMs start to struggle after about 20 pages of text. Even if your prompts and follow-ups are short, LLMs tend to produce voluminous responses, and all of that text adds up quickly.

The goal is to keep everything clear and concise, giving the LLM just enough context without overwhelming it.

In my experience, the more time an LLM spends trying to figure out what I mean, the less time it spends working on what I want.

Since everything in the chat counts, including attachments and the LLM’s “thought process” (if it’s a reasoning model), I usually start a new chat after the first couple of exchanges to keep it sharp.
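To see how quickly a chat adds up, here is a rough Python sketch that converts visible text into estimated pages and tokens. The constants are assumptions: about 500 words per single-spaced page, and about 0.75 words per token, a commonly cited approximation for English text that varies by model. By that estimate, 20 pages is about 10,000 words, or roughly 13,000 tokens. The sketch cannot see a reasoning model’s hidden “thought process,” so it will undercount for those models.

```python
# Rough rules of thumb (assumptions, not model specifications):
WORDS_PER_PAGE = 500      # roughly one single-spaced page of prose
WORDS_PER_TOKEN = 0.75    # common estimate for English text; varies by tokenizer
SOFT_LIMIT_PAGES = 20     # the ~20-page soft limit discussed in this section


def word_count(texts: list[str]) -> int:
    """Count whitespace-separated words across prompts, replies, and pasted attachments."""
    return sum(len(t.split()) for t in texts)


def estimate_pages(texts: list[str]) -> float:
    return word_count(texts) / WORDS_PER_PAGE


def estimate_tokens(texts: list[str]) -> int:
    return round(word_count(texts) / WORDS_PER_TOKEN)


def should_start_new_chat(texts: list[str], limit_pages: float = SOFT_LIMIT_PAGES) -> bool:
    """True once the visible conversation reaches the soft page limit."""
    return estimate_pages(texts) >= limit_pages


if __name__ == "__main__":
    # Hypothetical chat: two short prompts, two long replies.
    chat = ["word " * 50, "word " * 1500, "word " * 40, "word " * 2000]
    print(f"{estimate_pages(chat):.1f} pages, ~{estimate_tokens(chat)} tokens")
```

For the hypothetical chat above, two short prompts already produce roughly 7 pages (about 4,800 tokens), mostly from the replies.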

Project folders can make this a lot easier, as you can create multiple chat windows that are all tied to the same “library” of documents.

Confidentiality

I’ll briefly touch on how I think about confidentiality when working with LLMs day-to-day. I’ve posted a more in-depth look at the ethical confidentiality implications of LLM use in law practice here.

Over time I’ve discovered that many (maybe most?) of the tasks I give LLMs don’t require me to share identifying information at all.

Useful and relevant legal research can be completed by simply giving the model generic frameworks or scenario-based descriptions (in other words, the way it’s done in law school).

When asking an LLM to draft or review a contract provision, party names or exact dates usually aren't necessary. The model needs only the foundational structure or key terms, which typically aren’t inherently identifiable.

To create “generic” input, I approach LLM interactions much the way I might describe a legal issue to a colleague in an elevator. I describe the core facts, challenges, or questions generally enough to preserve confidentiality (e.g., omitting party names, locations, etc.), while still clearly outlining the issue.
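For longer fact patterns, a simple find-and-replace pass can help swap names you have already identified for neutral labels. The sketch below is a hypothetical helper, `genericize`, that replaces only the terms you list (case-insensitively, as whole words); it will not catch indirect identifiers, so the output still needs the same judgment described above before it goes anywhere.

```python
import re


def genericize(text: str, replacements: dict[str, str]) -> str:
    """Replace each listed identifying term with a neutral label.

    Matching is case-insensitive and whole-word. Terms not listed pass through
    unchanged, so a human still needs to review the result.
    """
    # Replace longer terms first so "Acme Holdings" is handled before "Acme".
    for term in sorted(replacements, key=len, reverse=True):
        label = replacements[term]
        # Lookarounds (instead of \b) also work for terms ending in punctuation, e.g. "Inc."
        pattern = r"(?<!\w)" + re.escape(term) + r"(?!\w)"
        # A function replacement keeps any backslashes in the label literal.
        text = re.sub(pattern, lambda _m: label, text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    facts = "Acme Holdings sued Jane Roe in Springfield after Acme missed the March 3 deadline."
    labels = {
        "Acme Holdings": "[Company A]",
        "Acme": "[Company A]",
        "Jane Roe": "[Individual B]",
        "Springfield": "[City]",
        "March 3": "[Date]",
    }
    print(genericize(facts, labels))
    # → [Company A] sued [Individual B] in [City] after [Company A] missed the [Date] deadline.
```

All names in the example are fictional placeholders.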

This is a skill most attorneys have already naturally developed in practice, by necessity. I think it translates well to LLM interactions.

Use Case: Research

I still use Google for quick searches for basic information, but when I need a comprehensive look at a specific area, I’ve found tremendous value in starting with web-enabled “deep research” tools.

In my experience the results can vary widely, but if the subject matter is well documented and doesn’t involve math, they can be astonishing.

Even when it gets specifics wrong (which does happen), it generally puts me in the right neighborhood for what I need to be thinking about.

Vetting sources and double checking facts is tedious, but it’s far faster than building a report by hand.

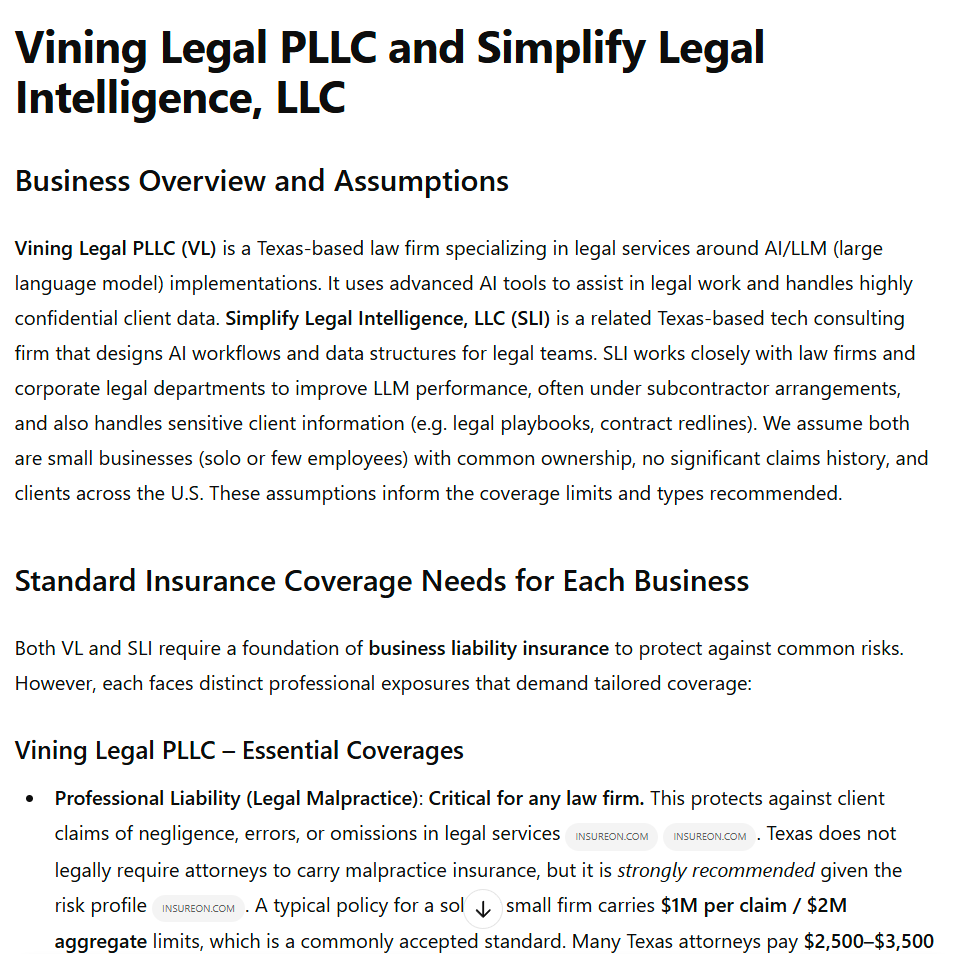

Example: When I was starting my practice, I needed to figure out what kinds of insurance coverage clients would expect the firm to have. For the first 15 years of my career, very talented finance people had always handled this, so I was fairly clueless.

Step 1. I gave an “offline” LLM some foundation data about my business and asked it to create a prompt for insurance research.

Step 2. The “prompt engineer” LLM produced a research prompt narrowly tailored to my businesses and circumstances.

Step 3. I gave the prompt to the deep research LLM. It asked me a few follow up questions, which to me looked like they were written by very talented finance people.

Step 4. I answered the questions, and the LLM came back with an extremely detailed report (just under 30 pages) that covered a lot of ground in a way that was specifically relevant to my situation.

I don’t know what I would have paid a consultant for this, but probably more than the LLM provider’s subscription fee.

To me, output like this shows the value of high-quality foundation data. The net result was a much easier time shopping for and selecting coverage, and a less painful path through a new domain.

Conclusion

Having LLMs to support my practice has significantly reduced the time I’ve spent mired in operational details, and allowed me to focus more on practice development and long-term goals.

I have no doubt my approach will evolve as these LLMs do, but I hope you’ve found something here useful in the meantime.

Thanks for reading, and may you be well

Jace